General framework

Trying to understand the processes that make us conscious and intelligent beings has occupied the minds of scientists and philosophers since time immemorial. The technological explosion of the last century, together with the birth of an interdisciplinary approach to the study of human cognitive processes (where psychology, neuroscience, philosophy and the computational sciences converge), has allowed artificial intelligence to attempt psychologically plausible computational representations of these processes.

These computational models are intended to emulate cognitive systems that perform complex tasks in the real world. The search for computational cognitive systems capable of reproducing human behavior requires interdisciplinary work. The goal of our research is to study original mathematical models, comprising control, perception and decision models, implemented in autonomous artificial agents (robots) in order to generate coherent behavior in their environment. At the same time, the results should provide a better understanding of how the human brain functions in low-level processes.

Multi-Modal Representations

Throughout its short history, artificial intelligence has had at least one significant change of direction in its quest to find and understand the processes underlying that very elusive human trait, intelligence. In recent decades, together with the other sciences that study cognition, the paradigm has shifted towards rediscovering the importance of an agent's body for the development of cognition.

In what has become known as embodied cognition, it is now widely accepted that understanding and replicating intelligence requires studying agents in relation to their environment. The subjects of study should be agents with a body that learn through interaction with their environment, and this learning should be a developmental process.

Pursuing the same aims, cognitive robotics takes its inspiration from studies of cognitive development in humans. Artificial agents or robots, by having a body and being situated in the real world, ensure a continuous, real-time coupling of body, control and environment. These characteristics have made robots ideal platforms for reproducing and studying the basis of cognition. Theories of grounded cognition have also had a great impact on this quest. In general, these theories reject the use of amodal symbols for the representation of knowledge and focus instead on the role of the body in its acquisition. More importantly for our purposes, grounded cognition focuses on the role of internal simulations of the sensorimotor interaction of agents with their environment.

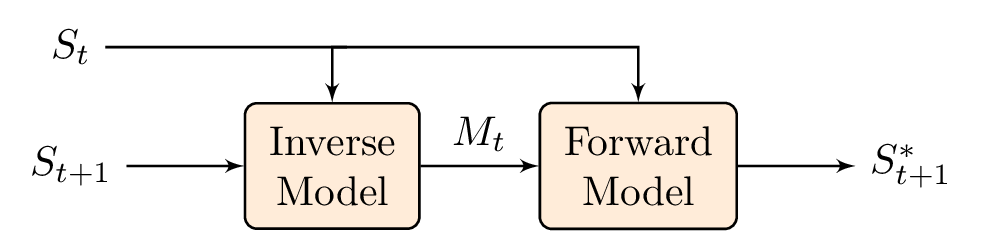

In the quest for a basic internal simulation mechanism, forward and inverse models have been proposed. A forward model is an internal model that incorporates knowledge about the sensory changes produced by an agent's self-generated actions. Given a sensory situation St and a motor command Mt (an intended or actual action), the forward model predicts the next sensory situation St+1. While forward models (or predictors) capture the causal relation between actions and their consequences, inverse models (or controllers) perform the opposite transformation, providing a system with the motor command (Mt) necessary to go from a current sensory situation (St) to a desired one (St+1).
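The two mappings described above can be illustrated with a minimal sketch. It is not the lab's implementation: it assumes a hypothetical one-dimensional toy world (`world_step`), experience gathered by random motor babbling, and a simple nearest-neighbour lookup standing in for whatever learning method is actually used. All function names are illustrative.

```python
import random


def world_step(s, m):
    """Hypothetical toy world: S is a 1-D position, M is a displacement."""
    return s + m


def collect_experience(n_samples, seed=0):
    """Random motor babbling: record (S_t, M_t, S_t+1) triples."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        s = rng.uniform(-1.0, 1.0)
        m = rng.uniform(-0.5, 0.5)
        samples.append((s, m, world_step(s, m)))
    return samples


def forward_model(samples, s, m):
    """Predict S_t+1 from (S_t, M_t) using the closest stored experience."""
    nearest = min(samples, key=lambda e: (e[0] - s) ** 2 + (e[1] - m) ** 2)
    return nearest[2]


def inverse_model(samples, s, s_next):
    """Return the M_t of the stored experience closest to (S_t, S_t+1)."""
    nearest = min(samples, key=lambda e: (e[0] - s) ** 2 + (e[2] - s_next) ** 2)
    return nearest[1]


experience = collect_experience(5000)
predicted = forward_model(experience, 0.2, 0.1)  # should be close to 0.3 in this toy world
command = inverse_model(experience, 0.2, 0.3)    # should be close to 0.1 in this toy world
```

Both models read from the same set of experienced triples; only the direction of the query differs, which is the predictor/controller symmetry described in the text.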

In the cognitive sciences, forward and inverse models have been found capable of modeling several behaviours and characteristics of the brain.

Most research in our lab revolves around the use and exploration of internal models, addressing questions such as:

- how far can we exploit the characteristics and capabilities of these models?

- what capabilities can the models provide to artificial agents?

- which learning strategy is best suited to encoding these models?

- what are the benefits for the different levels of abstraction and analysis of the models?

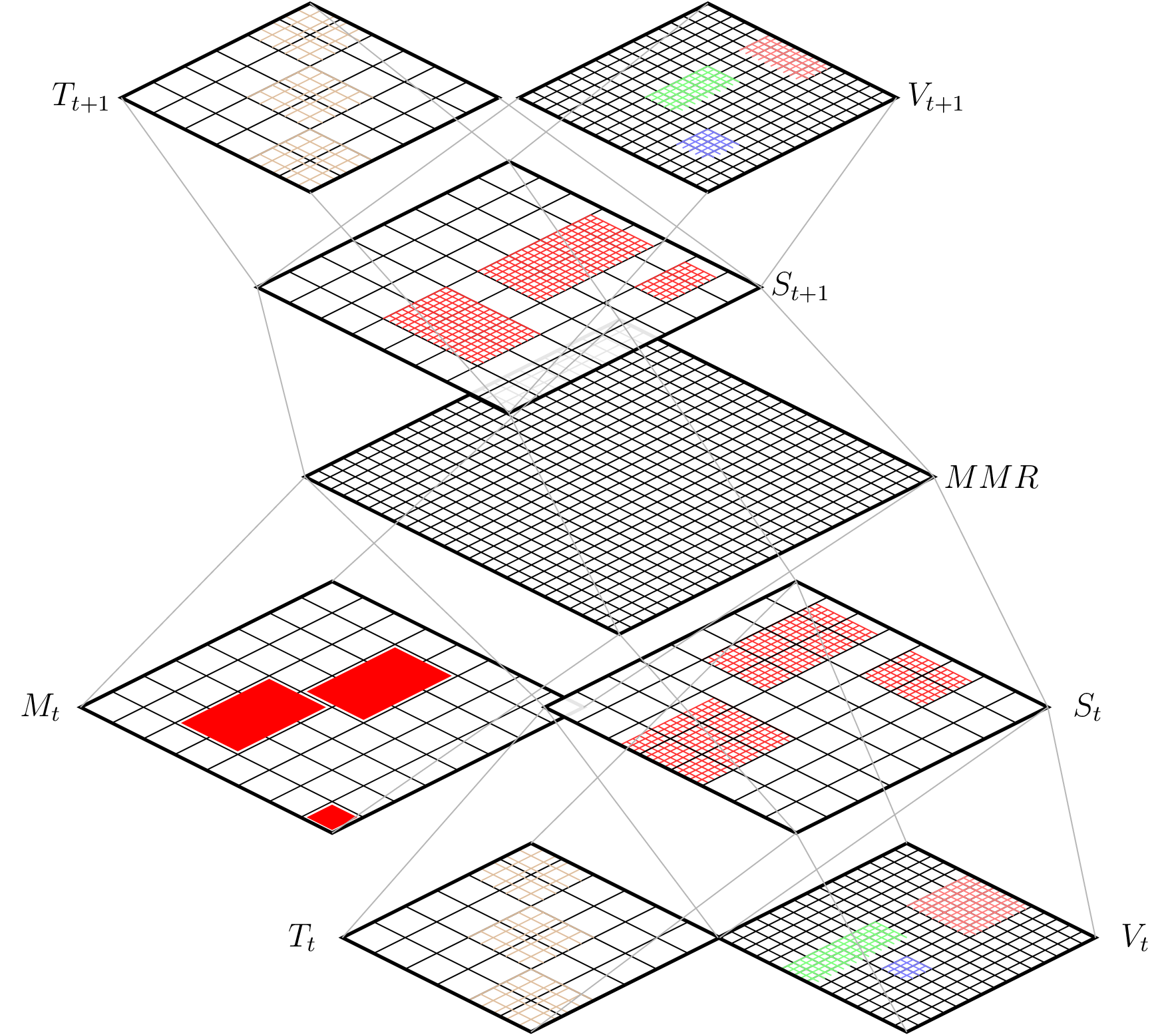

A proposed architecture, labelled SOIMA in the accompanying figure, should be capable of working as both a forward and an inverse model. In the presence of St and Mt, the layers activate the architecture upwards, forming a Multimodal Representation of the event. At the same time, the activation in the central layer would cause the upper layer to signal St+1. This flow of information would serve as a forward model, while flow in the opposite direction would work as an inverse model. Given this bidirectional flow of information, an interesting addition to the state of the art is the consequence of activating only the Multimodal Representation layer. The hypothesis is that this sort of activation would mimic mental imagery and the rehearsal of sensorimotor schemes.

Information and data are transferred in all directions, encoding Multi-Modal Representations (MMR) formed by whatever modalities constitute the different sensory situations (S) and the motor commands (M). It is worth noting that the proposal does not address representations in the classic GOFAI (good old-fashioned artificial intelligence) sense, as amodal, abstract entities that encode knowledge or concepts, but rather as the gathering and clustering of data coming from different modalities, whether these are sensory or motor channels of information.
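The idea of a single multimodal store queried in different directions can be sketched as follows. This is an illustrative assumption, not the SOIMA implementation: the multimodal representation is approximated by prototypes obtained with simple competitive learning over joint (St, Mt, St+1) vectors, the toy world St+1 = St + Mt is hypothetical, and the names `train_prototypes` and `complete` are invented for the example.

```python
import random


def train_prototypes(samples, n_prototypes, learning_rate=0.05, seed=0):
    """Cluster joint (S_t, M_t, S_t+1) vectors with simple competitive learning."""
    rng = random.Random(seed)
    # Initialise prototypes from randomly chosen data points.
    prototypes = [list(x) for x in rng.sample(samples, n_prototypes)]
    for x in samples:
        # The best-matching prototype moves a small step towards the sample.
        winner = min(
            prototypes,
            key=lambda p: sum((a - b) ** 2 for a, b in zip(p, x)),
        )
        for i, value in enumerate(x):
            winner[i] += learning_rate * (value - winner[i])
    return prototypes


def complete(prototypes, cue):
    """Match a partial cue (None marks a missing modality) and return the
    full best-matching prototype, which fills in the missing entries."""
    known = [i for i, value in enumerate(cue) if value is not None]
    best = min(prototypes, key=lambda p: sum((p[i] - cue[i]) ** 2 for i in known))
    return tuple(best)


rng = random.Random(1)
data = []
for _ in range(3000):
    s, m = rng.uniform(-1.0, 1.0), rng.uniform(-0.5, 0.5)
    data.append((s, m, s + m))  # hypothetical toy world: S_t+1 = S_t + M_t

mmr = train_prototypes(data, n_prototypes=150)

forward = complete(mmr, (0.2, 0.1, None))[2]  # cue S_t and M_t, read out S_t+1
inverse = complete(mmr, (0.2, None, 0.3))[1]  # cue S_t and S_t+1, read out M_t
imagined = tuple(mmr[0])                      # activate one prototype directly
```

Cueing with St and Mt plays the role of the forward flow, cueing with St and St+1 the inverse flow, and reading out a prototype with no external cue loosely corresponds to activating only the multimodal layer. The resolution of the answers is limited by the number of prototypes.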

We have implemented and tested these models using both simulated and real robots, aiming to solve basic robotics problems such as:

- acquiring basic knowledge about the surroundings, such as the notion of distance to objects, and acquiring three-dimensional perception for safe navigation with a mobile robot

- acquiring a self-map in a humanoid robot, as well as extending it when using tools

- characterising the kinematic properties of a humanoid robot, endowing it with, among other things, the capability of self-other distinction